Apache Beam’s BigQuery connector, typically run on Dataflow, provides a scalable way to handle large-scale data ingestion and processing. Its BigQuery Sink (BigQueryIO.Write in the Java SDK) writes processed data to BigQuery. This guide covers the key concepts and features of the BigQuery Sink: write methods, create and write dispositions, schema management, time partitioning, dynamic destinations, and error handling.

Write Methods

BigQuery Sink supports multiple methods for writing data. Each method offers distinct trade-offs in terms of performance, cost, and guarantees. Here’s a breakdown of the four primary write methods:

1. STORAGE_WRITE_API

- Description: STORAGE_WRITE_API writes through the BigQuery Storage Write API and works for both streaming and batch pipelines. It provides exactly-once semantics and low-latency writes.

- Key Features:

  - Schema Evolution: Supports schema updates during writes.

  - Atomicity: Writes are atomic and consistent.

- Recommended Use Case: High-throughput use cases where latency and reliability are critical.

- Limitations: Ingestion is billed by data volume, so it can cost more than FILE_LOADS, which relies on batch load jobs.

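    import com.google.api.services.bigquery.model.TableFieldSchema;
    import com.google.api.services.bigquery.model.TableRow;
    import com.google.api.services.bigquery.model.TableSchema;
    import java.util.Arrays;
    import org.apache.beam.sdk.Pipeline;
    import org.apache.beam.sdk.io.gcp.bigquery.BigQueryIO;
    import org.apache.beam.sdk.io.gcp.bigquery.TableRowJsonCoder;
    import org.apache.beam.sdk.options.PipelineOptionsFactory;
    import org.apache.beam.sdk.transforms.Create;

    public class StorageWriteAPIExample {
      public static void main(String[] args) {
        // Read runner, project, and temp-location settings from the command line.
        Pipeline pipeline = Pipeline.create(PipelineOptionsFactory.fromArgs(args).create());

        pipeline
            // Two in-memory rows; the coder is set explicitly for TableRow.
            .apply("Create data", Create.of(
                    new TableRow().set("id", 1).set("name", "Alice"),
                    new TableRow().set("id", 2).set("name", "Bob"))
                .withCoder(TableRowJsonCoder.of()))
            .apply("Write to BigQuery",
                BigQueryIO.writeTableRows()
                    .to("project:dataset.table")
                    .withWriteDisposition(BigQueryIO.Write.WriteDisposition.WRITE_APPEND)
                    .withCreateDisposition(BigQueryIO.Write.CreateDisposition.CREATE_IF_NEEDED)
                    .withMethod(BigQueryIO.Write.Method.STORAGE_WRITE_API)
                    // CREATE_IF_NEEDED needs a schema to create the table.
                    .withSchema(new TableSchema().setFields(Arrays.asList(
                        new TableFieldSchema().setName("id").setType("INTEGER"),
                        new TableFieldSchema().setName("name").setType("STRING")))));

        pipeline.run().waitUntilFinish();
      }
    }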

2. STORAGE_API_AT_LEAST_ONCE

- Description: This method also uses the BigQuery Storage Write API, but with at-least-once delivery semantics. Data is guaranteed to reach BigQuery, but retries may produce duplicate records.

- Key Features:

  - Optimized for streaming pipelines.

  - Lower overhead than STORAGE_WRITE_API, because it skips the bookkeeping required for exactly-once delivery.

- Recommended Use Case: Scenarios where throughput matters more than deduplication, or where duplicates can be removed downstream.
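Selecting this method is a one-line change in the sink configuration. The following is a minimal sketch, assuming a placeholder table spec and a table that already exists; the class and method names are illustrative only.

```java
import com.google.api.services.bigquery.model.TableRow;
import org.apache.beam.sdk.io.gcp.bigquery.BigQueryIO;

final class AtLeastOnceWrite {
  // Builds a sink that accepts possible duplicates in exchange for lower overhead.
  static BigQueryIO.Write<TableRow> sink() {
    return BigQueryIO.writeTableRows()
        .to("project:dataset.table") // placeholder table spec
        .withCreateDisposition(BigQueryIO.Write.CreateDisposition.CREATE_NEVER)
        .withWriteDisposition(BigQueryIO.Write.WriteDisposition.WRITE_APPEND)
        .withMethod(BigQueryIO.Write.Method.STORAGE_API_AT_LEAST_ONCE);
  }
}
```

Apply it with rows.apply("Write to BigQuery", AtLeastOnceWrite.sink()). If duplicates matter to consumers, they have to be handled on the query side, for example by deduplicating on a unique row key.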

3. FILE_LOADS

- Description: This method stages data in temporary files in Cloud Storage and then loads them into BigQuery in bulk through load jobs. It’s best suited for batch pipelines.

- Key Features:

  - Handles large volumes of data efficiently.

  - Cost-effective for batch processing.

- Recommended Use Case: Batch pipelines with periodic data loads.

    BigQueryIO.writeTableRows()
        .to("project:dataset.table")
        .withSchema(new TableSchema()
            .setFields(Arrays.asList(
                new TableFieldSchema().setName("id").setType("INTEGER"),
                new TableFieldSchema().setName("value").setType("STRING"))))
        .withWriteDisposition(BigQueryIO.Write.WriteDisposition.WRITE_TRUNCATE)
        .withCreateDisposition(BigQueryIO.Write.CreateDisposition.CREATE_IF_NEEDED)
        .withMethod(BigQueryIO.Write.Method.FILE_LOADS);

4. STREAMING_INSERTS

- Description: Directly inserts rows into BigQuery via the legacy streaming API (tabledata.insertAll).

- Key Features:

  - Low-latency data ingestion.

  - Immediate data availability in BigQuery.

- Recommended Use Case: Real-time analytics where data freshness is paramount.

- Limitations: Throughput is bounded by streaming quotas, so high-volume pipelines can be throttled.

Create Disposition and Write Disposition

Create Disposition

- CREATE_IF_NEEDED: Creates the BigQuery table if it doesn’t exist. This requires a schema, for example one supplied through withSchema().

- CREATE_NEVER: Fails the pipeline if the target table does not exist.

- Use Case: Choose CREATE_IF_NEEDED for dynamic pipelines or CREATE_NEVER for strict table management.

Write Disposition

- WRITE_TRUNCATE: Overwrites the entire table.

- WRITE_APPEND: Appends data to the existing table.

- WRITE_EMPTY: Writes only if the target table is empty; otherwise, the pipeline fails.

- Use Case: Use WRITE_TRUNCATE for periodic refreshes, WRITE_APPEND for incremental updates, and WRITE_EMPTY for one-time loads that must not touch existing data.
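How the two dispositions combine can be easier to see as a small decision function. The sketch below is plain Java that models the rules described above. It is not Beam code; the enum and method names are hypothetical and only mirror the disposition names.

```java
final class DispositionRules {
  enum Create { CREATE_IF_NEEDED, CREATE_NEVER }
  enum Write { WRITE_TRUNCATE, WRITE_APPEND, WRITE_EMPTY }

  // Describes what a write does for a given table state.
  static String outcome(Create create, Write write, boolean tableExists, boolean tableEmpty) {
    if (!tableExists) {
      // The create disposition only matters when the table is missing.
      return create == Create.CREATE_NEVER
          ? "FAIL: table not found"
          : "CREATE table, then write rows";
    }
    switch (write) {
      case WRITE_TRUNCATE:
        return "REPLACE existing rows";
      case WRITE_APPEND:
        return "APPEND to existing rows";
      default: // WRITE_EMPTY
        return tableEmpty ? "WRITE into empty table" : "FAIL: table not empty";
    }
  }
}
```

For example, outcome(Create.CREATE_NEVER, Write.WRITE_APPEND, false, true) reports a failure because the table is missing, while the same call with CREATE_IF_NEEDED creates the table first.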

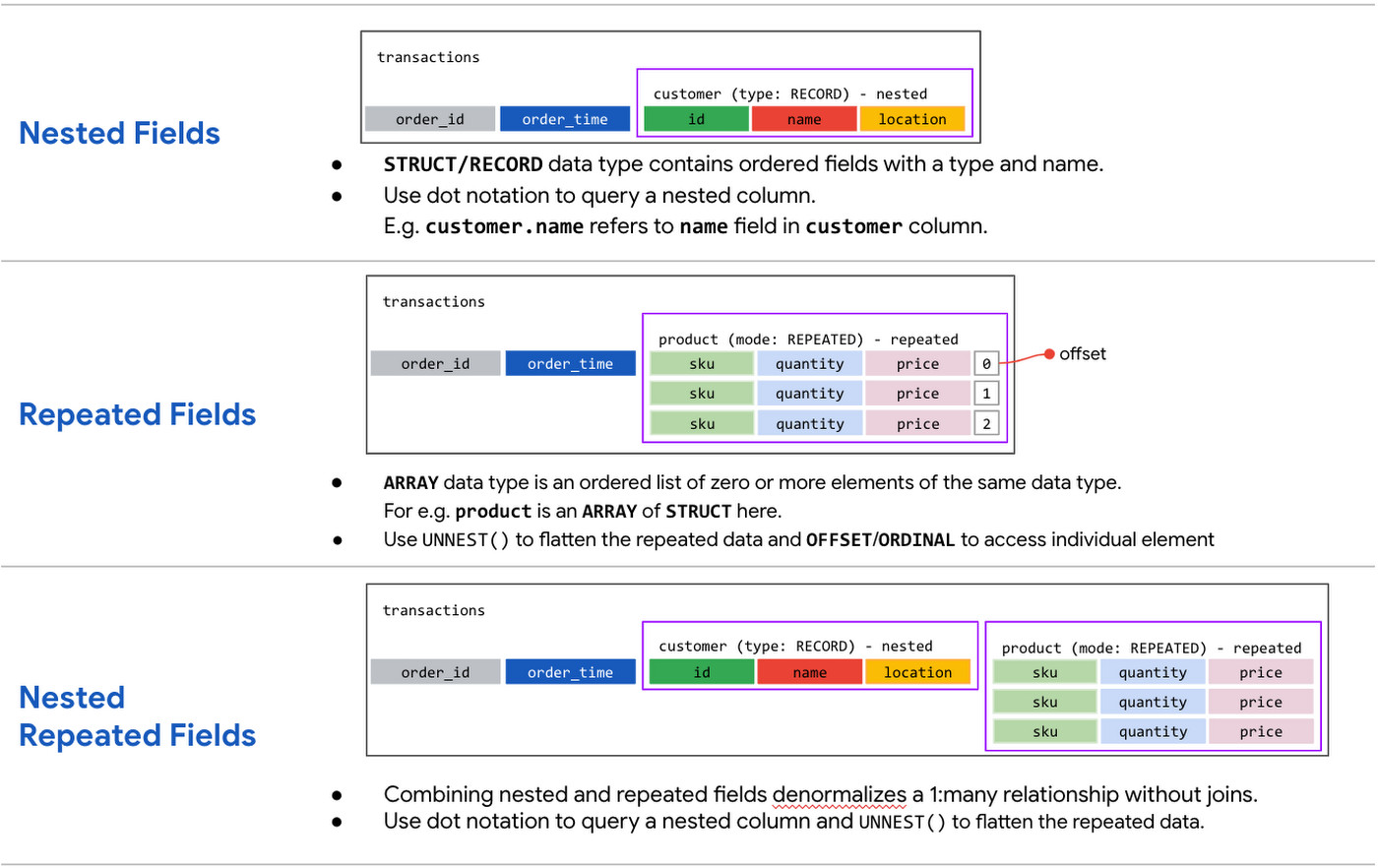

Schema Management

Schema management ensures that the data written to BigQuery adheres to the defined table schema. BigQuery Sink supports:

- Automatic Schema Detection:

  - Can derive the table schema from the input when the PCollection carries a Beam schema (useBeamSchema() in the Java SDK), so a missing target table can be created without a hand-written TableSchema.

  - Useful for dynamic pipelines with varying data structures.

- Schema Updates:

  - Supported by the STORAGE_WRITE_API for scenarios where the input schema evolves over time.

  - Recommended to predefine schemas for consistency and predictability.

- Schema Validation:

  - Enforces schema compatibility between the input data and the BigQuery table.

  - Helps prevent runtime errors caused by schema mismatches.
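A predefined schema combined with schema-update handling might look like the sketch below. It assumes a Beam release in which ignoreUnknownValues() and withAutoSchemaUpdate() are available for the Storage Write API methods. The table spec, field names, and class name are placeholders.

```java
import com.google.api.services.bigquery.model.TableFieldSchema;
import com.google.api.services.bigquery.model.TableRow;
import com.google.api.services.bigquery.model.TableSchema;
import java.util.Arrays;
import org.apache.beam.sdk.io.gcp.bigquery.BigQueryIO;

final class EvolvingSchemaWrite {
  // Predefined schema; "email" is NULLABLE so rows without it are still valid.
  static TableSchema schema() {
    return new TableSchema().setFields(Arrays.asList(
        new TableFieldSchema().setName("id").setType("INTEGER").setMode("REQUIRED"),
        new TableFieldSchema().setName("name").setType("STRING").setMode("NULLABLE"),
        new TableFieldSchema().setName("email").setType("STRING").setMode("NULLABLE")));
  }

  static BigQueryIO.Write<TableRow> sink() {
    return BigQueryIO.writeTableRows()
        .to("project:dataset.table") // placeholder table spec
        .withSchema(schema())
        .withMethod(BigQueryIO.Write.Method.STORAGE_WRITE_API)
        // Assumption: both options exist in the Beam release in use.
        .ignoreUnknownValues()       // accept rows with fields the table lacks
        .withAutoSchemaUpdate(true); // pick up table schema changes while running
  }
}
```

Marking new columns as NULLABLE keeps the change backward compatible: rows produced before the column existed remain valid against the updated schema.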

Time Partitioning

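Time partitioning organizes a table into partitions based on a DATE or TIMESTAMP column, or on ingestion time. Queries that filter on the partitioning column scan fewer bytes, which improves both performance and cost.

Types of Time Partitioning:

- Daily Partitioning: Creates one partition per day based on the partitioning column.

- Hourly Partitioning: Useful for high-frequency data that is queried over short time ranges.

- Monthly and Yearly Partitioning: Coarser partitions for tables that span long periods. Integer-range partitioning is a separate BigQuery feature and is not a type of time partitioning.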

Configuration:

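Specify the partitioning field in the pipeline by passing a TimePartitioning object, which names the partition type and column, to withTimePartitioning().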
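A minimal sketch of that configuration, assuming a hypothetical events table with a TIMESTAMP column named event_ts; the table spec, field names, and class name are placeholders.

```java
import com.google.api.services.bigquery.model.TableFieldSchema;
import com.google.api.services.bigquery.model.TableRow;
import com.google.api.services.bigquery.model.TableSchema;
import com.google.api.services.bigquery.model.TimePartitioning;
import java.util.Arrays;
import org.apache.beam.sdk.io.gcp.bigquery.BigQueryIO;

final class PartitionedWrite {
  static BigQueryIO.Write<TableRow> sink() {
    // Daily partitions keyed on the (hypothetical) event_ts column.
    TimePartitioning partitioning = new TimePartitioning()
        .setType("DAY")
        .setField("event_ts");

    return BigQueryIO.writeTableRows()
        .to("project:dataset.events") // placeholder table spec
        .withSchema(new TableSchema().setFields(Arrays.asList(
            new TableFieldSchema().setName("id").setType("INTEGER"),
            new TableFieldSchema().setName("event_ts").setType("TIMESTAMP"))))
        // Partitioning is applied when the sink creates the table.
        .withTimePartitioning(partitioning)
        .withCreateDisposition(BigQueryIO.Write.CreateDisposition.CREATE_IF_NEEDED)
        .withWriteDisposition(BigQueryIO.Write.WriteDisposition.WRITE_APPEND);
  }
}
```

Changing setType("DAY") to "HOUR", "MONTH", or "YEAR" selects a different granularity. Omitting setField() partitions by ingestion time instead of a column.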

Benefits:

- Faster queries due to smaller data scans.

- Cost savings by querying only relevant partitions.
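To make "only relevant partitions" concrete: in a daily-partitioned table, each partition can be addressed with a decorator of the form table$YYYYMMDD, with day boundaries in UTC. The helper below is a plain-Java sketch of that mapping; the class and method names are hypothetical.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

final class PartitionDecorator {
  // Daily partition boundaries are computed in UTC.
  private static final DateTimeFormatter DAY =
      DateTimeFormatter.ofPattern("yyyyMMdd").withZone(ZoneOffset.UTC);

  // Returns the table name with the decorator of the day containing eventTime.
  static String dailyPartition(String table, Instant eventTime) {
    return table + "$" + DAY.format(eventTime);
  }
}
```

An event at 2024-01-15T23:59:59Z maps to dataset.events$20240115, and one second later the next event lands in dataset.events$20240116. A query restricted to a single day therefore touches one partition rather than the whole table.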

Dynamic Destinations

Dynamic Destinations allow a pipeline to choose the target BigQuery table for each record at runtime, so a single write step can feed many tables.

How it Works:

- Pass a DynamicDestinations implementation to to() in your BigQuery Sink configuration.

- Implement getDestination() to compute a destination key for each record, and getTable() to map that key to the target table.

- Implement getSchema() to supply the schema for each destination.
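In the Java SDK, per-record routing is expressed by subclassing DynamicDestinations and passing an instance to to(). The sketch below routes rows by a hypothetical region field. The table naming scheme, field names, and class name are assumptions for illustration.

```java
import com.google.api.services.bigquery.model.TableFieldSchema;
import com.google.api.services.bigquery.model.TableRow;
import com.google.api.services.bigquery.model.TableSchema;
import java.util.Arrays;
import org.apache.beam.sdk.io.gcp.bigquery.DynamicDestinations;
import org.apache.beam.sdk.io.gcp.bigquery.TableDestination;
import org.apache.beam.sdk.values.ValueInSingleWindow;

// Routes each row to a per-region table, using the region as destination key.
final class RegionDestinations extends DynamicDestinations<TableRow, String> {
  @Override
  public String getDestination(ValueInSingleWindow<TableRow> element) {
    // "region" is a hypothetical field; rows without it go to a fallback table.
    Object region = element.getValue().get("region");
    return region == null ? "unknown" : region.toString();
  }

  @Override
  public TableDestination getTable(String region) {
    // The key must only contain characters that are valid in a table name.
    return new TableDestination(
        "project:dataset.events_" + region, "Events for region " + region);
  }

  @Override
  public TableSchema getSchema(String region) {
    // Same schema for every region here; it could vary per destination.
    return new TableSchema().setFields(Arrays.asList(
        new TableFieldSchema().setName("id").setType("INTEGER"),
        new TableFieldSchema().setName("region").setType("STRING")));
  }
}
```

Usage would be BigQueryIO.writeTableRows().to(new RegionDestinations()), combined with the usual create and write dispositions.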

Use Case:

- Writing data to multiple tables based on customer ID, region, or any business-specific logic.

Benefits:

- Simplifies the handling of heterogeneous datasets.

- Reduces pipeline complexity by encapsulating logic for destination selection.

Error Handling

Redirect Failed Inserts

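Use dead-letter tables to capture failed records during ingestion. The WriteResult returned by the sink exposes the rows that BigQuery rejected, so they can be written to a separate table for inspection and replay instead of failing the pipeline.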
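One way to wire up a dead-letter table with STREAMING_INSERTS is sketched below. The table specs, the dead-letter column names (error, payload), and the class name are assumptions. Both tables are assumed to exist already.

```java
import com.google.api.services.bigquery.model.TableRow;
import org.apache.beam.sdk.io.gcp.bigquery.BigQueryIO;
import org.apache.beam.sdk.io.gcp.bigquery.BigQueryInsertError;
import org.apache.beam.sdk.io.gcp.bigquery.InsertRetryPolicy;
import org.apache.beam.sdk.io.gcp.bigquery.WriteResult;
import org.apache.beam.sdk.transforms.MapElements;
import org.apache.beam.sdk.values.PCollection;
import org.apache.beam.sdk.values.TypeDescriptor;

final class DeadLetterWrite {
  static void write(PCollection<TableRow> rows) {
    WriteResult result = rows.apply("Write events",
        BigQueryIO.writeTableRows()
            .to("project:dataset.events") // placeholder table spec
            .withCreateDisposition(BigQueryIO.Write.CreateDisposition.CREATE_NEVER)
            .withWriteDisposition(BigQueryIO.Write.WriteDisposition.WRITE_APPEND)
            .withMethod(BigQueryIO.Write.Method.STREAMING_INSERTS)
            // Retry transient errors; permanent failures are emitted as output.
            .withFailedInsertRetryPolicy(InsertRetryPolicy.retryTransientErrors())
            // Needed so the failed rows carry the error details.
            .withExtendedErrorInfo());

    result.getFailedInsertsWithErr()
        // Flatten each failure into a row for the dead-letter table.
        .apply("To dead-letter row",
            MapElements.into(TypeDescriptor.of(TableRow.class))
                .via((BigQueryInsertError err) -> new TableRow()
                    .set("error", err.getError().toString())
                    .set("payload", err.getRow().toString())))
        .apply("Write dead letters",
            BigQueryIO.writeTableRows()
                .to("project:dataset.events_dead_letter") // placeholder
                .withCreateDisposition(BigQueryIO.Write.CreateDisposition.CREATE_NEVER)
                .withWriteDisposition(BigQueryIO.Write.WriteDisposition.WRITE_APPEND));
  }
}
```

With the Storage Write API methods, the equivalent output is exposed through getFailedStorageApiInserts() on the same WriteResult.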

Conclusion

The BigQuery Sink in Apache Beam offers a wide range of features to meet diverse data ingestion requirements. Whether you’re building real-time streaming pipelines or batch jobs, understanding the available write methods, configuration options, and advanced features like schema management, time partitioning, and dynamic destinations can help you design efficient and scalable data pipelines.

By leveraging these features, you can ensure that your BigQuery integration is optimized for performance, cost, and reliability. Start exploring these capabilities to unlock the full potential of your Dataflow pipelines!